The Ultimate Guide to Prompt Engineering – Techniques, Examples, and Success Stories

This comprehensive guide will delve deep into the intricacies of prompt engineering, exploring its evolution, core concepts, advanced techniques, practical applications, success stories, challenges, and future prospects.

1. The Evolution of Prompt Engineering

Early Days: Rule-Based Systems

In the early days of AI, interactions were primarily based on rigid, rule-based systems. Users had to learn specific commands or query structures to interact with these systems effectively.

With advancements in Natural Language Processing (NLP), systems became more flexible, allowing for more natural language inputs. However, these systems still required careful phrasing to get accurate results.The introduction of GPT models marked a significant shift. These models could generate human-like text, opening up new possibilities for interaction.

The Emergence of Prompt Engineering

As models like GPT-3 demonstrated remarkable capabilities, the focus shifted from model development to input crafting. This gave birth to prompt engineering as a distinct discipline.

2. Core Concepts in Prompt Engineering

Anatomy of a Prompt

-

- Instruction: The core task or question.

- Context: Background information.

- Input Data: Specific information for the task.

- Output Format: Desired structure of the response.

- Examples: Demonstrations of expected input-output pairs.

Types of Prompts

-

- Zero-Shot Prompts: No examples provided.

- One-Shot Prompts: One example provided.

- Few-Shot Prompts: Multiple examples provided.

- Chain-of-Thought Prompts: Step-by-step reasoning process.

Key Principles

-

- Clarity: Be specific and unambiguous.

- Conciseness: Provide necessary information without verbosity.

- Relevance: Include only pertinent details.

- Consistency: Maintain a uniform style and format.

3. Advanced Prompt Engineering Techniques

1. Role-Playing and Persona Assignment

-

- Technique: Assign a specific role or persona to the AI.

- Example: “You are a senior data scientist with 10 years of experience. Explain the concept of feature engineering to a junior developer.”

- Benefits: Tailors the response style and depth to the assigned role.

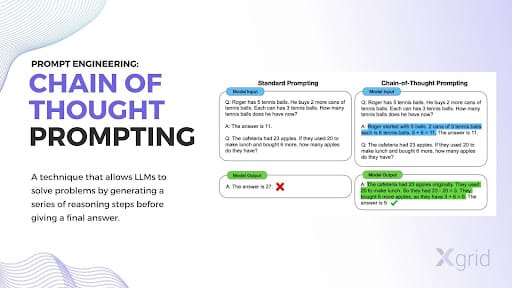

2. Chain-of-Thought (CoT) Prompting

-

- Chain-of-thought prompting is a technique in prompt engineering designed to enhance language models’ performance on tasks involving logic, calculation, and decision-making. It achieves this by organizing the input prompt in a manner that simulates human reasoning.

To create a chain-of-thought prompt, users generally add an instruction like “Describe your reasoning step by step” or “Explain your answer in a series of steps” to their query for a large language model (LLM). This approach essentially requests the LLM to produce not only the final outcome but also to outline the sequence of intermediate steps that lead to that conclusion.

-

- Benefits: Improves accuracy for complex reasoning tasks.

3. Self-Consistency

-

- Technique: Generate multiple responses and select the most consistent one.

- Example: Ask the same question multiple times with slight variations, then compare answers.

- Benefits: Reduces errors and improves reliability.

4. Guardrails

-

- Technique: Implement ethical guidelines and constraints within the prompt.

- Example: “Provide investment advice while adhering to ethical financial practices and emphasizing the importance of diversification.”

- Benefits: Ensures AI responses align with specific values or principles.

5. Prompt Chaining

-

- Technique: Use the output of one prompt as input for another.

- Example:

- First prompt: “Summarize the key points of climate change.”

- Second prompt: “Based on the summary [insert previous output], suggest three policy recommendations.”

- Benefits: Allows for complex, multi-step tasks.

6. Meta-Prompting

-

- Technique: Use prompts to generate or improve other prompts.

- Example: “Generate a prompt that would help a language model write a compelling short story.”

- Benefits: Helps in creating more effective prompts.

7. Retrieval-Augmented Generation (RAG)

-

- Technique: Combine prompt engineering with information retrieval from external sources.

- Example: “Using the latest data from [specific source], analyze the trends in renewable energy adoption.”

- Benefits: Enhances responses with up-to-date or specialized information.

8. Prompt Templating

-

- Technique: Create reusable prompt structures with placeholders for variable inputs.

Example:

Template: "Translate the following {source_language} text to {target_language}: {text}"

Usage: "Translate the following English text to French: 'Hello, how are you?'"

-

- Benefits: Ensures consistency and saves time for repetitive tasks.

5. Prompt Engineering for Specific Tasks

Natural Language Processing

- Text Summarization

- Technique: Specify desired length and focus areas.

- Example: “Summarize the following article in 3 bullet points, focusing on economic impacts.”

- Sentiment Analysis

- Technique: Request specific sentiment categories and justification.

- Example: “Classify the sentiment of this tweet as positive, negative, or neutral. Provide reasoning for your classification.”

- Named Entity Recognition

- Technique: Clearly define entity types and format.

- Example: “Identify all person names, locations, and organizations in the following text. Format the output as a JSON object.”

Creative Writing

- Story Generation

- Technique: Provide detailed prompts with character descriptions, setting, and plot elements.

- Example: “Write a short story set in a dystopian future where water is scarce. Include a young protagonist and a moral dilemma.”

- Poetry Creation

- Technique: Specify style, theme, and structure.

- Example: “Compose a haiku about autumn leaves. Follow the 5-7-5 syllable structure.”

Programming and Code Generation

- Code Writing

- Technique: Clearly state the programming language, desired functionality, and any specific requirements.

- Example: “Write a Python function that calculates the Fibonacci sequence up to n terms. Use recursion and include error handling for invalid inputs.”

- Code Explanation

- Technique: Request line-by-line explanations or focus on specific aspects.

- Example: “Explain the following JavaScript code, focusing on the use of closures and their benefits.”

Data Analysis

- Data Interpretation

- Technique: Provide context about the data and specify the type of insights needed.

- Example: “Given this sales data for the past year, identify the top 3 trends and suggest potential reasons for these patterns.”

- Statistical Analysis

- Technique: Clearly state the hypothesis and required statistical tests.

- Example: “Perform a t-test to determine if there’s a significant difference between the two groups in this dataset. Explain the results and their implications.”

6. Case Studies and Success Stories

Case Study 1: OpenAI’s GPT-3 and DALL-E

- Success: Using carefully crafted prompts, researchers demonstrated GPT-3’s ability to perform tasks it wasn’t explicitly trained for, such as basic arithmetic and code generation.

- Key Technique: Few-shot learning, where a few examples were provided in the prompt to guide the model’s behavior.

Case Study 2: GitHub Copilot

- Success: Leveraging prompt engineering techniques, GitHub Copilot can generate code snippets and entire functions based on natural language descriptions or existing code context.

- Key Technique: Contextual prompting, where the surrounding code and comments serve as an implicit prompt.

Case Study 3: AI-Assisted Medical Diagnosis

- Success: Researchers at Stanford University used GPT-3 with engineered prompts to assist in medical diagnosis, achieving accuracy comparable to human doctors in certain scenarios.

- Key Technique: Chain-of-thought prompting, guiding the model through the diagnostic process step-by-step.

Case Study 4: Legal Document Analysis

- Success: Law firms have used prompt engineering to extract key information from large volumes of legal documents, significantly reducing manual review time.

- Key Technique: Structured output prompting, where the desired format of the extracted information is specified in detail.

Case Study 5: Personalized Education

- Success: Educational platforms have used prompt engineering to create adaptive learning experiences, generating personalized explanations and practice problems.

- Key Technique: Role-playing prompts, where the AI takes on the persona of a tutor tailored to the student’s learning style.

7. Challenges and Limitations in Prompt Engineering

1. Prompt Sensitivity

- Challenge: Small changes in prompt wording can lead to significant differences in output.

- Solution Approach: Rigorous testing and iterative refinement of prompts.

2. Model Hallucinations

- Challenge: AI models can generate plausible but incorrect information.

- Solution Approach: Implement fact-checking prompts and cross-reference with reliable sources.

3. Ethical Considerations

- Challenge: Ensuring prompts don’t lead to biased or harmful outputs.

- Solution Approach: Develop ethical guidelines for prompt creation and implement content filtering.

4. Scalability

- Challenge: Creating prompts that work consistently across different models and versions.

- Solution Approach: Develop modular and adaptive prompt structures.

5. Prompt Injection Attacks

- Challenge: Malicious users might try to override intended behaviors through carefully crafted inputs.

- Solution Approach: Implement robust input validation and model fine-tuning to resist such attacks.

8. The Future of Prompt Engineering

Automated Prompt Optimization

- Prediction: AI systems that can automatically generate and refine prompts for optimal performance.

- Potential Impact: Democratization of AI capabilities, allowing non-experts to leverage advanced AI functionalities.

Multimodal Prompting

- Prediction: Integration of text, image, audio, and video inputs in prompts.

- Potential Impact: More nuanced and context-rich interactions with AI systems.

Collaborative AI Systems

- Prediction: Prompt engineering techniques that enable multiple AI models to work together on complex tasks.

- Potential Impact: Solving previously intractable problems by combining specialized AI capabilities.

Personalized AI Interactions

- Prediction: Prompts that adapt to individual user preferences and interaction styles.

- Potential Impact: More natural and effective human-AI collaboration.

Standardization and Best Practices

- Prediction: Emergence of industry standards and certifications for prompt engineering.

- Potential Impact: Improved reliability and interoperability of AI systems across different platforms.

9. Conclusion

Prompt engineering stands at the intersection of human creativity and artificial intelligence, unlocking unprecedented capabilities in AI systems. As we’ve explored in this comprehensive guide, mastering the art and science of prompt engineering opens doors to more efficient, accurate, and innovative AI applications across various domains.

The field is rapidly evolving, with new techniques and best practices emerging regularly. Success in prompt engineering requires a deep understanding of both the capabilities and limitations of AI models, as well as the nuances of human language and problem-solving processes.

As we look to the future, prompt engineering will likely play an increasingly crucial role in shaping how we interact with and leverage AI technologies. Whether you’re a developer, researcher, business leader, or simply an AI enthusiast, developing skills in prompt engineering will be invaluable in navigating and harnessing the power of artificial intelligence.

By continually refining our approach to prompt engineering, we can push the boundaries of what’s possible with AI, creating more intelligent, responsive, and beneficial systems that enhance human capabilities and drive innovation across all sectors of society.